Lifting the Curtain: Why Transparency and Accountability are Crucial in AI

Unpacking the implications of Generative AI (Gen AI) and how we innovate responsibly with Geotab Ace

This is part of a Geotab series on Responsible AI. It leverages recent work on generative AI, spanning the launch of Geotab Ace and the accompanying Responsible AI Whitepaper, our Gen AI Maturity Index, and this blog on ‘Explainability’ and ‘Interpretability’ in AI.

Artificial intelligence (AI) is rapidly transforming the commercial transportation industry. Telematics systems, powered by AI, are revolutionizing fleet management by providing valuable insights into driver behavior, vehicle performance, and route optimization. This data-driven approach not only improves operational efficiency but also enhances overall safety for commercial drivers.

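But as AI takes on a larger role in fleet decisions, it also raises questions about how these systems reach their conclusions and who answers for them. That's where the principles of accountability and transparency come in. These principles are essential for building trust in AI and ensuring that it's used for good.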

Transparency and Accountability: The Foundation of Ethical AI

- Transparency: Imagine a magic trick. You see the result, but you don't know how it happened. AI can sometimes feel like that. Transparency means making sure that we understand how an AI system works. It's like having a behind-the-scenes look at that magic trick. We need to know what data the AI uses, how it makes decisions, and what its limitations are. This is important because it allows us to identify potential biases or errors and helps us to trust the system's outputs.

- Accountability: If something goes wrong with an AI system, who is responsible? Accountability means having clear lines of responsibility for the decisions made by an AI system. This could include the developers who created the system, the organizations that use it, and even the individuals who are affected by its decisions. It's about making sure that someone is answerable for the AI's actions.

But accountability goes even deeper than that. It's not just about assigning blame if something goes wrong. It's about building responsibility into every stage of the AI lifecycle. This is where human oversight plays a crucial role.

Human Oversight: The Guiding Hand

We believe that AI is a powerful tool that should always be used with human oversight. This means:

- Responsible procurement: We carefully select AI systems that align with our ethical and sustainability principles.

- Ethical development: Our AI systems are designed and built with fairness, transparency, and accountability at their core.

- Careful deployment: We implement AI systems in a way that minimizes risks and maximizes benefits.

- Ongoing monitoring: We continuously evaluate our AI systems to ensure they are performing as intended and to address any unintended consequences.

To further strengthen accountability, organizations can utilize external scrutiny. This means:

- Independent audits: Having external experts assess our AI systems to ensure they meet ethical standards.

- Governmental monitoring: Working with regulators to ensure compliance with relevant laws and guidelines.

- Public transparency: Communicating openly with the public about our AI systems and how we are using them.

Opening the Black Box: Making AI Transparent and Accountable

Without transparency, accountability, and human oversight, AI can become a "black box" – making decisions that we don't understand and can't explain. This can lead to a number of problems, including:

- Bias and discrimination: AI systems can perpetuate and even amplify existing biases in our society. If we don't understand how an AI system is making decisions, we can't identify and correct these biases.

- Lack of trust: If people don't understand how AI works, they may be less likely to trust it. This can limit the potential benefits of AI and create fear and uncertainty.

- Misuse and abuse: Without accountability, AI can be used for harmful purposes, such as surveillance or manipulation.

Operationalizing Ethical AI

- Explainable AI (XAI): Researchers are developing techniques to make AI more transparent and explainable. This involves creating AI systems that can provide clear explanations for their decisions, in a way that humans can understand.

- Auditing and monitoring: Regularly auditing AI systems can help to identify potential problems and ensure that they are being used responsibly.

- Ethical guidelines and regulations: Developing clear ethical guidelines and regulations for AI can help to ensure that it is used for good and that those who develop and deploy AI systems are held accountable.

- Public education: It's important to educate the public about AI, its potential benefits, and its risks. This will help to build trust in AI and ensure that it is used in a way that benefits everyone.
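To make the explainability point concrete, here is a minimal sketch of what a "clear explanation for a decision" can look like. It assumes a deliberately simple linear driver-risk score; the feature names, weights, and baseline are hypothetical and are not taken from Geotab Ace or any real system. Because the score is a weighted sum, each input's contribution can be reported alongside the result.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weights for an illustrative risk score (assumption, not a real model).
WEIGHTS = {
    "harsh_braking_per_100km": 0.5,
    "speeding_minutes_per_100km": 0.3,
    "night_driving_share": 2.0,
}
BASELINE = 1.0  # hypothetical score when every feature is zero


@dataclass
class Explanation:
    score: float
    contributions: dict[str, float]  # how much each feature added to the score


def explain_score(features: dict[str, float]) -> Explanation:
    """Return the score together with each feature's additive contribution."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BASELINE + sum(contributions.values())
    return Explanation(score=score, contributions=contributions)


def top_reasons(explanation: Explanation, n: int = 2) -> list[str]:
    """List the n features with the largest absolute contribution."""
    ranked = sorted(
        explanation.contributions.items(),
        key=lambda item: abs(item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:n]]


example = explain_score(
    {
        "harsh_braking_per_100km": 4.0,
        "speeding_minutes_per_100km": 2.0,
        "night_driving_share": 0.25,
    }
)
print(example.score, top_reasons(example))
```

Real AI systems are rarely this simple, and explaining more complex models takes dedicated techniques. The goal is the same, though: a person reviewing the output can see which inputs drove it and challenge the result if it looks wrong.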

By embracing the principles of accountability, transparency, and human oversight, we can help to ensure that AI is used responsibly and ethically, creating a future where AI benefits all of humanity.

Our commitment to human-centric AI is exemplified in Geotab Ace, our virtual assistant designed to democratize data. Ace provides users with a feedback option directly within the interface, allowing them to report content, suggest improvements, or flag potential issues. Ace is built with a human-in-the-loop approach, meaning the system does not make decisions or take actions on its own; it empowers users with information and insights to make informed decisions.


Cyber Risk Advisor and Data & Technology Enthusiast with over a decade of experience delivering transformative projects in the financial services and insurance industries. As a respected privacy and AI risks advisor, FX leads the development of comprehensive action plans to address privacy risks in the AI era. With a strong background in privacy, data protection, and IT/IS business solutions, FX is also passionate about fintech, insurtech, and cleantech. He combines his technical expertise with excellent communication and relationship management skills to guide his business partners to success.

Table of Contents

Subscribe to get industry tips and insights

Related posts

Dash cams that protect driver privacy without missing key events

April 24, 2025

4 minute read

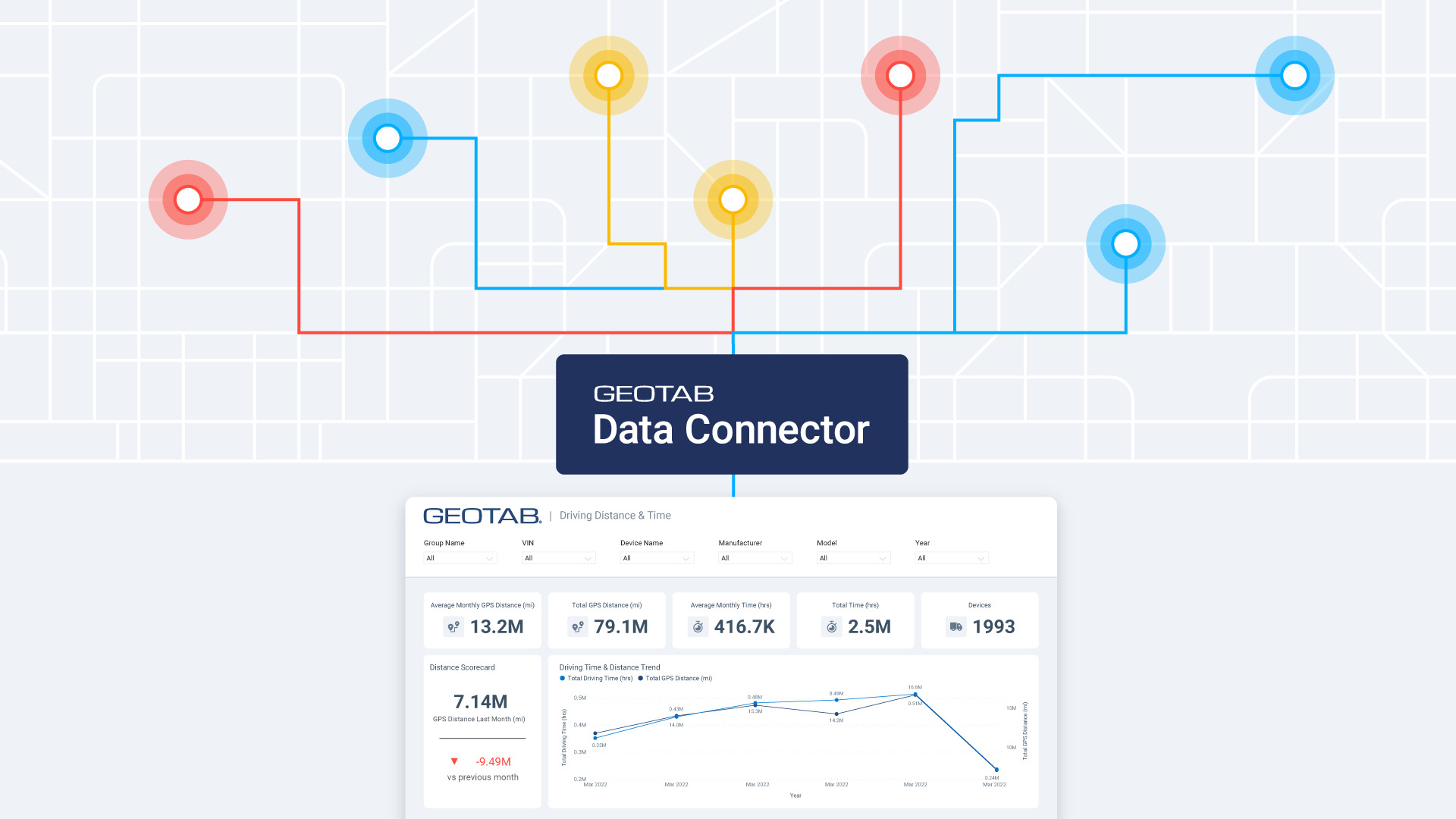

Smarter Municipal Fleet Management with Geotab Data Connector

April 22, 2025

3 minute read

Marketplace Spotlight: From Chaos to Clarity, Innovating Fleet Claims with Xtract

April 15, 2025

1 minute read

Unlocking Safer Roads: How Behavioral Science and Technology Are Improving Driver Safety

April 14, 2025

2 minute read

What is government fleet management software and how is it used?

April 10, 2025

3 minute read

.jpg)